Toolkit Development overview

Prerequisites

This toolkit uses Apache Ant 1.8 (or later) to build.

Internally Apache Maven 3.2 (or later) and Make are used.

Download and setup directions for Apache Maven can be found here: http://maven.apache.org/download.cgi#Installation

Set the M2_HOME environment variable to the path of the Maven home directory and add its bin directory to PATH:

export M2_HOME=/opt/apache-maven-3.5.0

export PATH=$PATH:$M2_HOME/bin

Build the toolkit

Run the following command in the streamsx.objectstorage directory:

ant all

Build the samples

Run the following command in the streamsx.objectstorage directory to build all projects in the samples directory:

ant build-all-samples

Toolkit Architecture Overview

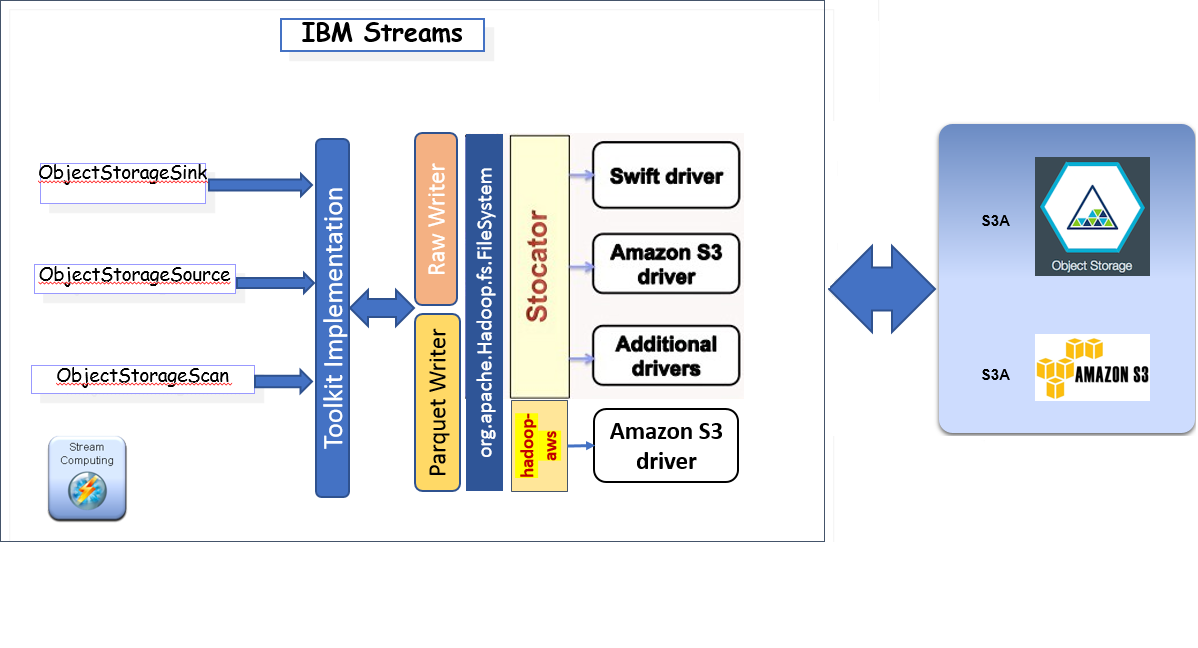

Following diagram represents the toolkit high-level architecture:


As can be seen, the toolkit is implemented on top of org.apache.hadoop.fs.FileSystem. This made it possible to reuse some of the HDFS Toolkit logic. However, because Object Storage behaves differently from HDFS in many respects, the current toolkit version contains a significant number of adaptations and optimizations that are unique to COS.

The diagram also shows the two clients used by the toolkit:

- hadoop-aws is an open-source S3 connector implementing org.apache.hadoop.fs.FileSystem. Its S3A Fast Upload feature is heavily used by the ObjectStorageSink operator. The feature uploads large files as blocks whose size is set by fs.s3a.multipart.size, buffers the multipart blocks on the local disk, and uploads them in parallel in the background.
- stocator is an open-source connector to object storage. In this toolkit, the stocator connector interacts directly with object stores using connectors optimized for IBM COS.
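To make the S3A Fast Upload description above more concrete, here is a minimal sketch that collects the relevant Hadoop S3A property names with example values and works out how many multipart blocks an object of a given size would need. The class name S3AFastUploadSketch, its methods, and the chosen values are assumptions made for illustration; they are not taken from the toolkit, and the toolkit may configure these properties differently.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative holder for S3A Fast Upload settings; not part of the toolkit. */
final class S3AFastUploadSketch {

    /** Builds the S3A properties discussed above; all values are examples. */
    static Map<String, String> fastUploadProperties(long multipartSizeBytes) {
        Map<String, String> props = new LinkedHashMap<>();
        // In Hadoop 2.x this flag enables the block-based upload path.
        props.put("fs.s3a.fast.upload", "true");
        // Size of each multipart block in bytes.
        props.put("fs.s3a.multipart.size", Long.toString(multipartSizeBytes));
        // Buffer pending blocks on the local disk rather than in memory.
        props.put("fs.s3a.fast.upload.buffer", "disk");
        // Upper bound on blocks a single stream may have queued or uploading.
        props.put("fs.s3a.fast.upload.active.blocks", "4");
        return props;
    }

    /** Number of multipart blocks needed for an object (ceiling division). */
    static long blockCount(long objectSizeBytes, long multipartSizeBytes) {
        return (objectSizeBytes + multipartSizeBytes - 1) / multipartSizeBytes;
    }

    public static void main(String[] args) {
        long partSize = 64L * 1024 * 1024; // 64 MiB, an arbitrary example value
        fastUploadProperties(partSize)
                .forEach((key, value) -> System.out.println(key + "=" + value));
        System.out.println("blocks for 1 GiB: "
                + blockCount(1024L * 1024 * 1024, partSize));
    }
}
```

With Hadoop on the classpath, each entry could be applied to an org.apache.hadoop.conf.Configuration through its set(String, String) method; the plain map is used here only to keep the sketch free of dependencies.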

Note that the parquet storage format is implemented with parquet-mr. More specifically, the parquet-hadoop module is used.

The toolkit user can switch between the hadoop-aws and stocator clients by specifying the appropriate protocol in the objectStorageURI parameter of the ObjectStorageSink operator or in the protocol parameter of the S3ObjectStorageSink operator. Concretely, when the s3a protocol is specified, the toolkit uses the hadoop-aws client. When the cos protocol is specified, the toolkit uses the stocator client.
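The protocol-to-client mapping described above can be sketched as a lookup on the URI scheme. This is an illustration only: the class ClientSelectionSketch, the Client enum, and the example URIs are hypothetical and do not reflect how the toolkit performs the selection internally.

```java
import java.net.URI;
import java.util.Locale;

/** Hypothetical helper that maps a URI protocol to the client named in the text. */
final class ClientSelectionSketch {

    enum Client { HADOOP_AWS, STOCATOR }

    /** Returns the client implied by the protocol (scheme) of the given URI. */
    static Client clientFor(String objectStorageURI) {
        String scheme = URI.create(objectStorageURI).getScheme();
        if (scheme == null) {
            throw new IllegalArgumentException("Missing protocol in " + objectStorageURI);
        }
        switch (scheme.toLowerCase(Locale.ROOT)) {
            case "s3a":
                return Client.HADOOP_AWS; // s3a protocol -> hadoop-aws client
            case "cos":
                return Client.STOCATOR;   // cos protocol -> stocator client
            default:
                throw new IllegalArgumentException("Unsupported protocol: " + scheme);
        }
    }

    public static void main(String[] args) {
        // The bucket names below are made up for the example.
        System.out.println(clientFor("s3a://example-bucket/"));
        System.out.println(clientFor("cos://example-bucket/"));
    }
}
```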

Toolkit Class Diagram

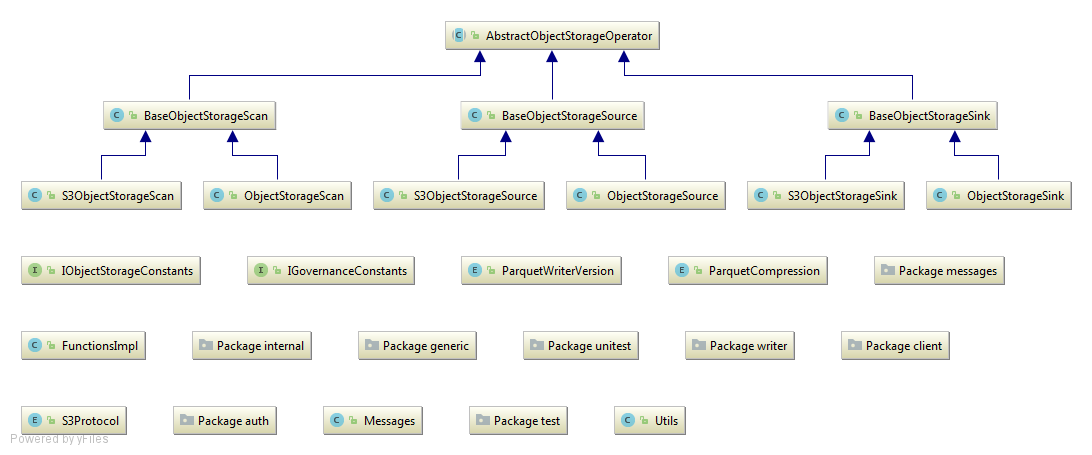

The following class diagram represents main toolkit classes and packages:

As might be seen from the diagram above there are three level of abstraction in the

toolkit implementation:


- The AbstractObjectStorageOperator class contains the logic that is common to all toolkit operators, such as establishing the COS connection according to the specific protocol (client).
- The BaseObjectStorageScan/Source/Sink classes contain the implementation that is common to the generic and S3-specific Scan/Source/Sink operators.
- The S3ObjectStorageXXX/ObjectStorageXXX classes contain the parameters specific to the S3 and generic operators respectively. Note that this layer contains almost no business logic.
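The three levels listed above can be pictured with a small inheritance sketch. All class, field, and method names below are hypothetical stand-ins for the toolkit classes, and the bucket parameter of the S3-specific variant is an assumption; only the layering itself is taken from the description.

```java
import java.util.ArrayList;
import java.util.List;

/** Level 1: logic shared by every operator, such as opening the connection. */
abstract class AbstractOperatorSketch {
    protected String connectedTo;

    /** Level 3 classes decide how the URI is assembled from their parameters. */
    protected abstract String objectStorageURI();

    void initialize() {
        connectedTo = objectStorageURI(); // a real operator would connect here
    }
}

/** Level 2: behaviour shared by the generic and the S3-specific sink. */
abstract class BaseSinkSketch extends AbstractOperatorSketch {
    final List<String> written = new ArrayList<>();

    void process(String tuple) {
        written.add(connectedTo + " <- " + tuple);
    }
}

/** Level 3 (generic): only carries the full URI parameter. */
final class GenericSinkSketch extends BaseSinkSketch {
    private final String uri;

    GenericSinkSketch(String uri) { this.uri = uri; }

    @Override protected String objectStorageURI() { return uri; }
}

/** Level 3 (S3-specific): carries protocol and bucket as separate parameters. */
final class S3SinkSketch extends BaseSinkSketch {
    private final String protocol;
    private final String bucket; // assumed parameter, for illustration only

    S3SinkSketch(String protocol, String bucket) {
        this.protocol = protocol;
        this.bucket = bucket;
    }

    @Override protected String objectStorageURI() {
        return protocol + "://" + bucket + "/";
    }
}

final class LayeringDemo {
    public static void main(String[] args) {
        BaseSinkSketch sink = new S3SinkSketch("s3a", "example-bucket");
        sink.initialize();
        sink.process("tuple-1");
        System.out.println(sink.written);
    }
}
```

The point of the layering is that the level 3 classes stay thin: they only translate operator parameters, while connection handling and tuple processing live in the two shared base levels.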

Sink Operators Implementation Details

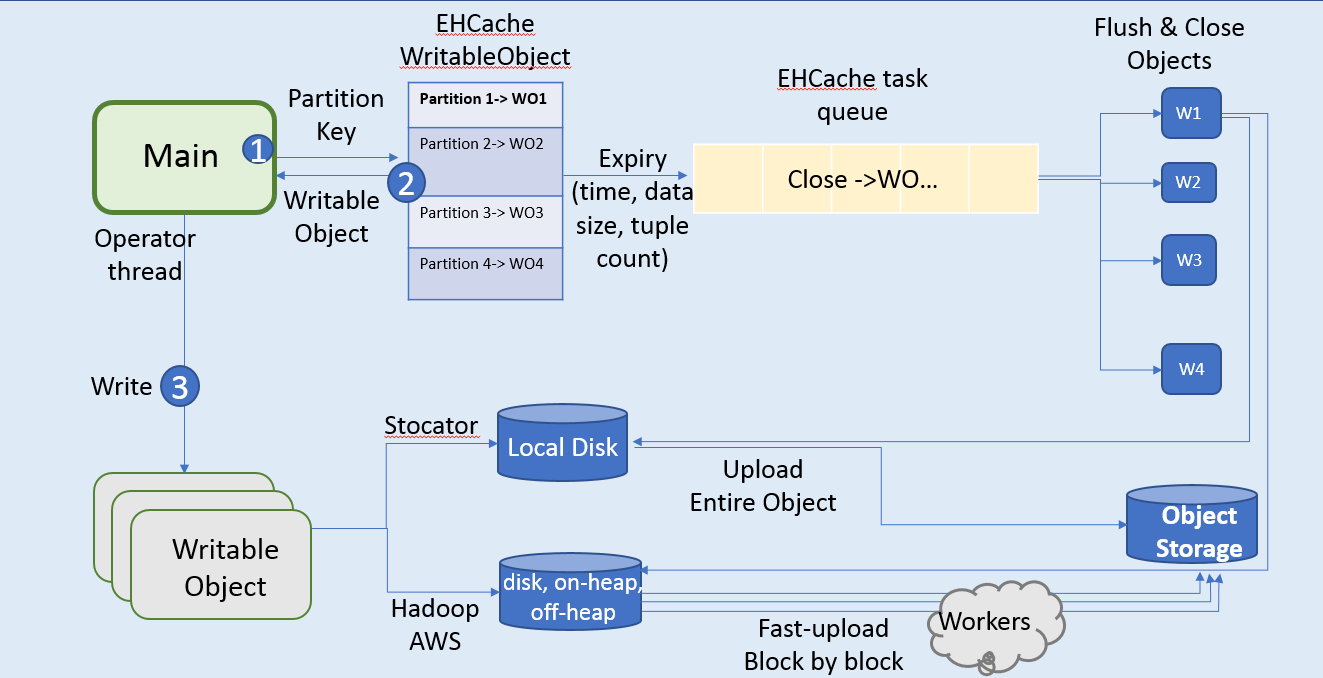

The following diagram represents the ObjectStorageSink and S3ObjectStorageSink

operators architecture:

Note that the operator implementation is based on EHCache3, which is used to manage the asynchronous rolling policy of active objects. At any given point in time, EHCache contains a map with the partition as the key and the corresponding OSWritableObject as the value. See the OSObjectRegistry class for concrete details of how the operator uses EHCache.

For each tuple, the operator main thread writes the tuple to the OutputStream managed by the specific client (hadoop-aws or stocator). When the rolling policy expires (by time, data size, or tuple count), the main thread creates a new object for the upcoming tuples, the old object is removed from EHCache and closed synchronously on a separate thread, and the new object is inserted into EHCache.
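The rolling sequence described above can be sketched with plain JDK classes. This is not the operator's implementation: a ConcurrentHashMap stands in for the EHCache-backed registry, a single-threaded executor stands in for the closing thread, only a tuple-count policy is modelled, and RollingSinkSketch and WritableObject are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class RollingSinkSketch {

    /** Hypothetical stand-in for OSWritableObject. */
    static final class WritableObject {
        final String name;
        final List<String> tuples = new ArrayList<>();
        volatile boolean closed;

        WritableObject(String name) { this.name = name; }

        void write(String tuple) { tuples.add(tuple); }

        void close() { closed = true; } // a real client would flush and upload here
    }

    // Stand-in for the EHCache-backed registry: partition -> active object.
    private final Map<String, WritableObject> registry = new ConcurrentHashMap<>();
    // Separate thread on which rolled objects are closed.
    private final ExecutorService closer = Executors.newSingleThreadExecutor();
    // Every object ever created, kept only so the sketch can be inspected.
    final List<WritableObject> created = new ArrayList<>();
    private final int tuplesPerObject;

    RollingSinkSketch(int tuplesPerObject) { this.tuplesPerObject = tuplesPerObject; }

    private WritableObject newObject(String partition) {
        WritableObject object = new WritableObject(partition + "/object_" + created.size());
        created.add(object);
        return object;
    }

    /** Called on the main thread for every tuple. */
    void process(String partition, String tuple) {
        WritableObject current = registry.computeIfAbsent(partition, this::newObject);
        current.write(tuple);
        if (current.tuples.size() >= tuplesPerObject) { // rolling policy expired
            WritableObject next = newObject(partition); // create the new object
            registry.remove(partition);                 // remove the old object
            closer.execute(current::close);             // close it off the main thread
            registry.put(partition, next);              // register the new object
        }
    }

    /** Waits for pending close operations to finish. */
    void shutdown() {
        closer.shutdown();
        try {
            closer.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        RollingSinkSketch sink = new RollingSinkSketch(2);
        for (String tuple : new String[] {"a", "b", "c"}) {
            sink.process("partition-0", tuple);
        }
        sink.shutdown();
        for (WritableObject object : sink.created) {
            System.out.println(object.name + " tuples=" + object.tuples + " closed=" + object.closed);
        }
    }
}
```

Handing the close to another thread keeps the main thread free to continue writing tuples to the new object while the old one is finalized.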

Toolkit Java-based Tests

The current Java-based test suite covers the ObjectStorageSink operator only. However, the suite can easily be extended with additional tests for the ObjectStorageSink and other operators. For more details about the tests and the functionality they cover, see Object Storage Toolkit Java-based Tests.

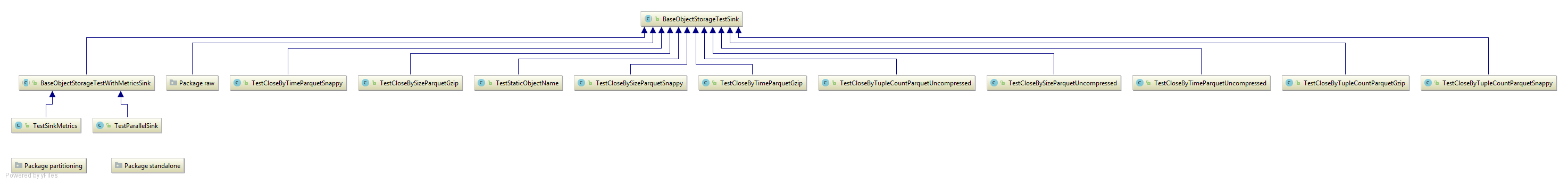

ObjectStorageSink Tests Class Diagrams

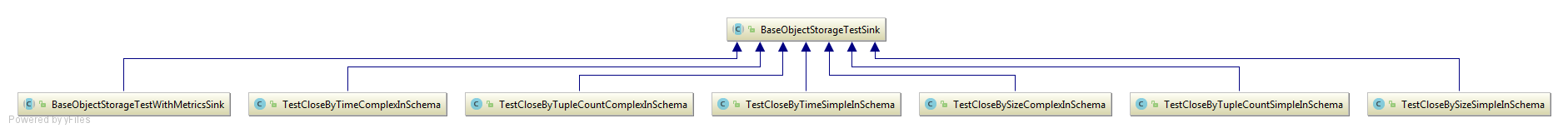

ObjectStorageSink tests can be divided into two groups by storage format:

- Tests for the raw storage format. The corresponding class diagram represents the classes involved in raw storage format testing. BaseObjectStorageTestSink is the base class for all Sink operator tests. Each specific test implements or overrides only the methods specific to it.
- Tests for the parquet storage format. The corresponding class diagram represents the classes involved in parquet storage format testing. BaseObjectStorageTestSink is the base class for all Sink operator tests. Each specific test implements or overrides only the methods specific to it.
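The pattern described above, a shared base class whose concrete tests override only what differs, can be sketched as follows. The class names, the storageFormat and expectedObjectCount hooks, and the recorded steps are all hypothetical; the actual methods of BaseObjectStorageTestSink may look quite different.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a shared sink test base class. */
abstract class BaseSinkTestSketch {
    final List<String> steps = new ArrayList<>();

    /** Storage format under test; every concrete test must supply it. */
    protected abstract String storageFormat();

    /** Default expectation; a concrete test overrides it only when it differs. */
    protected int expectedObjectCount() {
        return 1;
    }

    /** Shared flow that every test reuses unchanged. */
    final void run() {
        steps.add("configure sink with storageFormat=" + storageFormat());
        steps.add("run topology");
        steps.add("expect " + expectedObjectCount() + " object(s)");
    }
}

/** Raw format test: supplies the format and keeps the default expectation. */
final class RawSinkTestSketch extends BaseSinkTestSketch {
    @Override protected String storageFormat() { return "raw"; }
}

/** Parquet format test: also overrides the expected number of objects. */
final class ParquetRollingSinkTestSketch extends BaseSinkTestSketch {
    @Override protected String storageFormat() { return "parquet"; }

    @Override protected int expectedObjectCount() { return 3; }
}

final class SinkTestDemo {
    public static void main(String[] args) {
        BaseSinkTestSketch[] tests = {
            new RawSinkTestSketch(), new ParquetRollingSinkTestSketch()
        };
        for (BaseSinkTestSketch test : tests) {
            test.run();
            System.out.println(test.steps);
        }
    }
}
```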